16. Building Models Recap

Lesson Recap

That's it! You have completed the content for this course! Well done! Let's recap what you learned in this lesson!

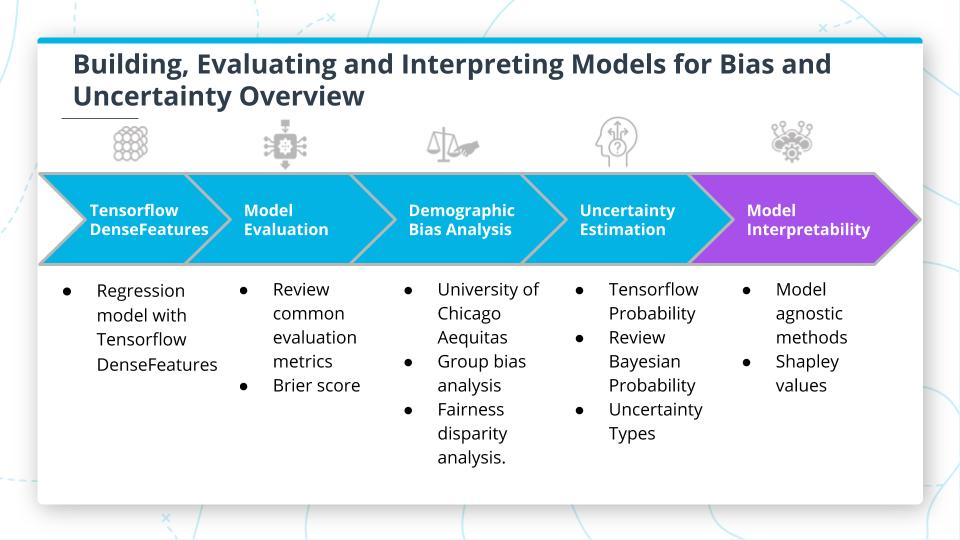

- You got hands-on with TensorFlow `DenseFeatures`, using it to build a simple regression model.

- You reviewed some common evaluation metrics for EHR models and then implemented Brier scores to better evaluate your models' probability predictions!

- You conducted a demographic bias analysis and used Aequitas for group bias and fairness disparity analysis to help ensure that your models generalize well across patient groups in real-world data.

- You used uncertainty estimation and TensorFlow Probability to build a Bayesian neural network (BNN) and reviewed some of the underlying concepts of Bayesian probability and the types of uncertainty.

- You wrapped up this lesson by interpreting models with model-agnostic methods such as Shapley values.
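A Brier score is the mean squared difference between a predicted probability and the actual 0/1 outcome, so 0.0 is perfect and lower is better. Here is a minimal sketch in plain Python, assuming binary labels and predicted probabilities of the positive class. The function name `brier_score` is our own illustration, not a library API.

```python
def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and actual outcome.

    y_true: 0/1 labels; y_prob: predicted probability that the label is 1.
    0.0 is a perfect score; lower is better.
    """
    if len(y_true) != len(y_prob):
        raise ValueError("y_true and y_prob must have the same length")
    if not y_true:
        raise ValueError("inputs must be non-empty")
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


if __name__ == "__main__":
    labels = [1, 0, 1, 1, 0]
    probs = [0.9, 0.2, 0.6, 0.8, 0.1]
    print(round(brier_score(labels, probs), 3))  # → 0.052
```

scikit-learn also provides `sklearn.metrics.brier_score_loss`, which is handy for cross-checking a hand-rolled version like this one.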
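Shapley values credit each feature with its average marginal contribution to a prediction, weighting each coalition S of the other features by |S|!(n−|S|−1)!/n!. The sketch below computes them exactly by enumerating every coalition. It assumes that an "absent" feature is replaced by a fixed baseline value, which is one common convention (others average over background data). `exact_shapley` and the toy models are hypothetical illustrations. Exact enumeration needs on the order of 2^n model calls, which is why libraries such as SHAP approximate it for real models.

```python
from itertools import combinations
from math import factorial


def exact_shapley(model, x, baseline):
    """Exact Shapley values for one input x of a model over n features.

    model: callable taking a list of n feature values and returning a number.
    baseline: values used for features that are "absent" from a coalition
    (an assumed convention for this sketch).
    """
    n = len(x)

    def value(coalition):
        # Features in the coalition keep their real value; the rest use the baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phis.append(phi)
    return phis


if __name__ == "__main__":
    linear = lambda z: 2 * z[0] + 3 * z[1] - z[2]
    phis = exact_shapley(linear, [1, 2, 3], [0, 0, 0])
    print([round(p, 6) for p in phis])  # → [2.0, 6.0, -3.0]
```

For a linear model with a zero baseline, each Shapley value reduces to weight × feature value, and the values sum to f(x) − f(baseline). That makes the toy example easy to check by hand.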

You have learned so much in just this one lesson! Hopefully, it was both the most fun and the most challenging part of the course.

Lesson Key Terms

| Key Term | Definition |
|---|---|
| ROC | Receiver Operating Characteristic curve: a graph of a classification model's performance that plots the True Positive Rate against the False Positive Rate across different decision thresholds. |
| AUC | Area Under the ROC Curve: the entire two-dimensional area underneath the ROC curve, summarizing performance across all thresholds (1.0 is perfect; 0.5 is no better than random guessing). |
| Precision | The fraction of retrieved (predicted positive) instances that are relevant: TP / (TP + FP). |
| Recall | The fraction of all relevant instances that were actually retrieved: TP / (TP + FN). Also called sensitivity or True Positive Rate. |
| F1 | The harmonic mean of precision and recall: 2 × Precision × Recall / (Precision + Recall). |
| RMSE | Root Mean Square Error: the square root of the mean squared difference between the values predicted by a model and the observed values. |
| MAPE | Mean Absolute Percentage Error: the mean of the absolute errors expressed as a percentage of the observed values; a common measure of regression model accuracy. |
| MAE | Mean Absolute Error: the mean absolute difference between paired observations, such as predicted and observed values. |
| Unintended Biases | Biases that are not intentional and are often not even apparent to the creator of a model. |
| Prior distribution | P(A): the probability of A before any new evidence is taken into account. |
| P(A \| Evidence) | The posterior probability: the prior P(A) updated with new evidence. |
| P(B) | The probability of the evidence B (the marginal likelihood), which normalizes the posterior in Bayes' theorem. |
| Aleatoric Uncertainty | Also known as statistical uncertainty; the "known unknowns". This uncertainty is inherent randomness (stochasticity) that naturally exists in the data, so it cannot be reduced by collecting more of the same data. |
| Epistemic Uncertainty | Also known as systematic uncertainty; the "unknown unknowns". This uncertainty comes from a lack of knowledge and can be reduced by adding data or features that measure something in more detail or otherwise provide more knowledge. |
| Black Box | A term often used in software for an algorithm whose inputs and outputs are visible but whose internal process for arriving at the outputs is not clear. |
| Model Agnostic | Methods that can be applied to any model, whether deep learning or traditional ML, because they only need the model's inputs and outputs. |
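To make the classification and regression metrics concrete, here is a minimal plain-Python sketch. The function names are our own illustrations, not a library API. AUC is computed here via its rank interpretation: the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counting half. MAPE is reported in percent and is undefined when an observed value is 0.

```python
import math


def precision_recall_f1(y_true, y_pred):
    """Precision, recall and F1 for binary 0/1 labels and 0/1 predictions."""
    tp = sum(1 for y, p in zip(y_true, y_pred) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(y_true, y_pred) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(y_true, y_pred) if y == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    # Count pairs where the positive scores higher; ties count as half a win.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def regression_errors(y_true, y_pred):
    """RMSE, MAE and MAPE (in percent) for paired observed/predicted values."""
    if any(y == 0 for y in y_true):
        raise ValueError("MAPE is undefined when an observed value is 0")
    n = len(y_true)
    errors = [p - y for y, p in zip(y_true, y_pred)]
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mae = sum(abs(e) for e in errors) / n
    mape = 100 * sum(abs(e / y) for e, y in zip(errors, y_true)) / n
    return rmse, mae, mape


if __name__ == "__main__":
    print(precision_recall_f1([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))
    print(roc_auc([1, 0, 1, 1, 0], [0.9, 0.7, 0.6, 0.8, 0.1]))
    print(regression_errors([100, 200, 300], [110, 190, 330]))
```

In practice, scikit-learn's `sklearn.metrics` module provides tested implementations of these metrics (for example `roc_auc_score`). Note that its MAPE function returns a fraction rather than a percentage.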
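The prior, the evidence and the posterior fit together through Bayes' theorem, where A is the hypothesis and B is the observed evidence. The worked numbers below are hypothetical and only illustrate the arithmetic. They assume a condition with 1% prevalence (the prior), a test that flags 90% of patients who have it, and a test that falsely flags 5% of patients who do not:

$$
P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}, \qquad P(B) = P(B \mid A)\,P(A) + P(B \mid \neg A)\,P(\neg A)
$$

$$
P(B) = 0.90 \times 0.01 + 0.05 \times 0.99 = 0.0585, \qquad P(A \mid B) = \frac{0.90 \times 0.01}{0.0585} \approx 0.154
$$

Even after a positive test, the posterior stays near 15% because the prior was so low. This is why the prior matters when updating beliefs with new evidence.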
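One common way to separate aleatoric from epistemic uncertainty applies when a BNN (or an ensemble) gives you several sampled predictions for the same input, each with its own predictive mean and variance. The approach uses the law of total variance. The average of the predicted variances estimates the aleatoric part, and the variance of the predicted means (how much the sampled models disagree) estimates the epistemic part. This is a minimal sketch under that assumption. `decompose_uncertainty` is a hypothetical helper, not a TensorFlow Probability API.

```python
from statistics import mean, pvariance


def decompose_uncertainty(pred_means, pred_variances):
    """Split predictive variance into aleatoric and epistemic parts.

    pred_means[k] and pred_variances[k] are the predictive mean and variance
    from the k-th sampled model (e.g. the k-th draw of BNN weights) for the
    same input. Law of total variance:
    total = mean of variances (aleatoric) + variance of means (epistemic).
    """
    if len(pred_means) != len(pred_variances) or not pred_means:
        raise ValueError("need matching, non-empty lists of means and variances")
    aleatoric = mean(pred_variances)   # noise each sampled model itself expects
    epistemic = pvariance(pred_means)  # disagreement between the sampled models
    return aleatoric, epistemic, aleatoric + epistemic


if __name__ == "__main__":
    # Hypothetical outputs from three sampled models for one patient.
    print(decompose_uncertainty([4.0, 5.0, 6.0], [1.0, 1.0, 1.0]))
```

In this decomposition, the epistemic term shrinks toward zero as the sampled models agree (for example, after training on more data). The aleatoric term reflects noise the models expect regardless of how much they have learned.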